An AI governance framework refers to the set of policies, procedures, and ethical parameters established to ensure the responsible development, deployment, and use of AI technology like ChatGPT.

This framework guides how AI is used in relation to people, discourages certain use cases, such as relying solely on ChatGPT for factual information, and recommends approved ones.

Decades of advancements in generative artificial intelligence (AI) culminated in the development of ChatGPT—an impressive AI-powered language model unleashed onto the world by OpenAI in late 2022.

McKinsey research estimates that generative AI could add up to $4.4 trillion to the global economy every year. ChatGPT has already reshaped traditional ways of working, with individuals and businesses worldwide scrambling to navigate this new terrain.

Despite the excitement around this new technology, significant concerns exist about its responsible use and management. These include ethical dilemmas, privacy infringements, bias in algorithms, potential job losses, and the risk of systems making critical decisions without human oversight.

Implementing robust data governance policies around AI is paramount to ensure responsible development, mitigate potential risks, and safeguard societal well-being.

This article will explore the intricacies of ChatGPT governance and show you how to build a framework for responsible AI.

What is an AI Governance Framework?

An AI governance framework is a set of principles and guidelines that determine how artificial intelligence (AI) models, such as those underpinning ChatGPT, are developed, managed, and used.

It covers decisions such as who can use the AI, what they can use it for, and how its unpredictable behavior can be controlled.

The framework also incorporates mechanisms for soliciting public input and implementing oversight, guaranteeing the technology’s ethical, equitable, and accountable utilization.

The objective is to mitigate potential misuse, bias, or harm while maximizing the overall benefits and practicality.
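The "who can use the AI, and for what" decision described above can be written down as an explicit policy. The following is a minimal sketch, not a prescribed implementation: the role names, use-case names, and the `is_allowed` helper are all hypothetical and exist only to illustrate the idea of an approved-use list.

```python
# Hypothetical policy: each role maps to the use cases it is approved for.
# Role and use-case names are illustrative assumptions, not from any standard.
APPROVED_USE_CASES: dict[str, set[str]] = {
    "support_agent": {"draft_reply", "summarize_ticket"},
    "marketing": {"brainstorm_copy"},
}


def is_allowed(role: str, use_case: str) -> bool:
    """Return True only if the use case is explicitly approved for the role."""
    # Unknown roles get an empty set, so anything not listed is denied.
    return use_case in APPROVED_USE_CASES.get(role, set())


print(is_allowed("support_agent", "draft_reply"))   # approved use case
print(is_allowed("marketing", "fact_checking"))     # not on the approved list
```

Denying anything that is not explicitly listed keeps the policy conservative: a new use case has to be reviewed and added before anyone can rely on it.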

Why is ChatGPT AI Governance Important?

As we delve deeper into the era of artificial intelligence, understanding the importance of governing tools like ChatGPT is a wise move.

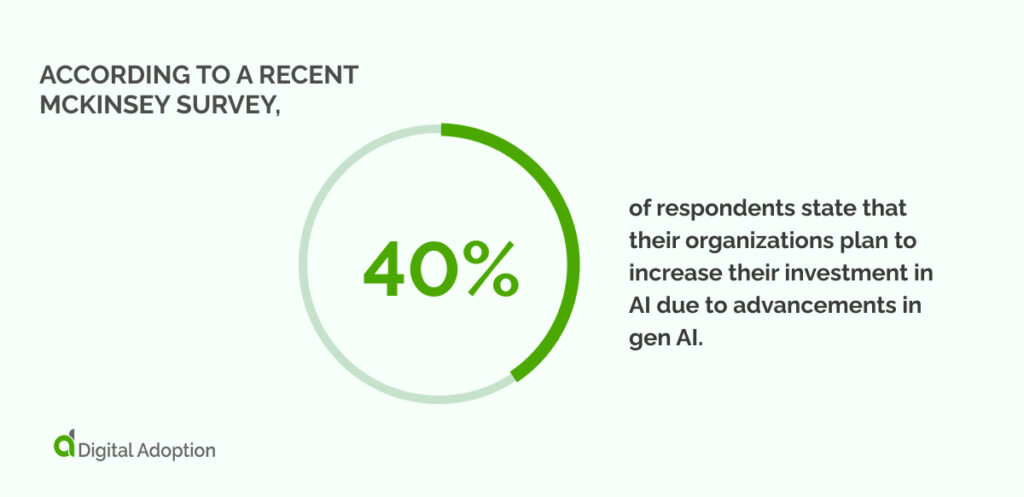

According to a recent McKinsey survey, 40% of respondents state that their organizations plan to increase their investment in AI due to advancements in gen AI.

The unprecedented benefits afforded through AI-driven LLMs such as ChatGPT make it all too easy to overlook the very material risks posed by the technology.

After all, ChatGPT has brought about significant improvements in various sectors, enhancing communication, efficiency, and user experiences.

However, in its current rudimentary and unregulated form, ChatGPT can breach personal privacy and make critical errors, and these risks far outweigh the benefits of its unregulated use.

Let’s take a look at why establishing AI governance is critical for operating new AI tech like ChatGPT.

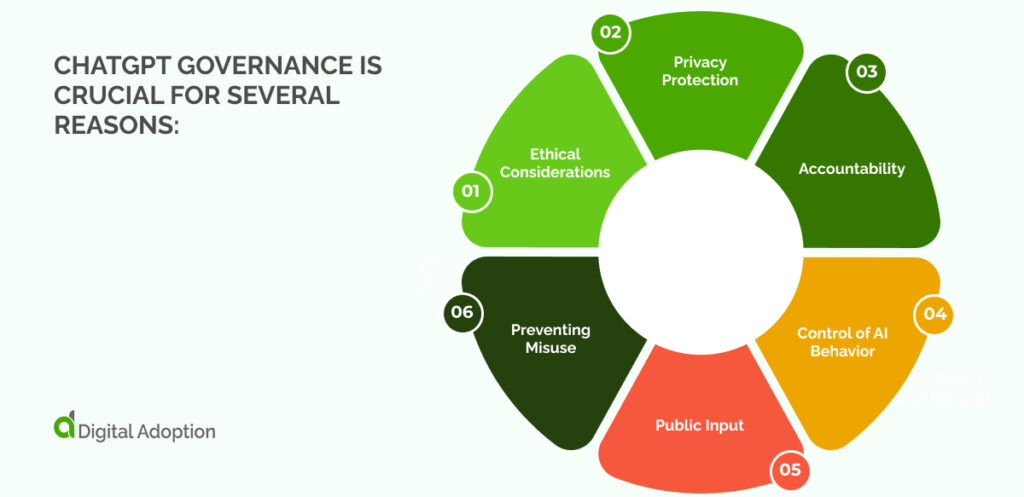

ChatGPT Governance is crucial for several reasons:

- Ethical Considerations: ChatGPT governance promotes the ethical conduct of AI. It sets guidelines that steer AI behavior away from harmful outcomes such as discrimination or bias. This results in fairer, more respectful, and more inclusive interactions with users.

- Privacy Protection: AI models, like ChatGPT, have the potential to handle sensitive information. Strong governance policies are needed to safeguard user data, ensuring that private conversations stay private and that personal data is not misused. This fosters trust and enhances user adoption and customer experience.

- Accountability: Governance establishes clear lines of responsibility for AI actions. If an AI makes an error or causes harm, governance structures determine who is accountable. This clarity is vital to ensure mistakes are promptly addressed and lessons learned to prevent future mishaps.

- Control of AI Behavior: Governance isn’t just about rules; it’s also about influence. It provides mechanisms to guide AI behavior, ensuring it aligns with human values and societal norms. This control enables us to shape AI to benefit society and respect individual rights.

- Public Input: Good governance involves public participation in decision-making processes around AI usage. By incorporating diverse perspectives, we can ensure that AI serves the needs of all users and stakeholders, promoting transparency, fairness, and trust in the system.

- Preventing Misuse: Governance in ChatGPT helps prevent malicious or irresponsible use of AI. By setting clear limits on what constitutes acceptable use, governance creates a safer, more responsible digital environment. This protection is especially important as AI increasingly integrates into our daily lives.

Risks and Concerns Surrounding ChatGPT

The development and implementation of AI governance frameworks are necessary to promote the ethical, fair, and inclusive use of AI systems.

While ChatGPT offers numerous benefits, such as enhanced productivity and improved user experiences, there are real risks that need to be acknowledged and mitigated.

Let’s explore some of the most pressing risks and concerns presented by the use of AI tools like ChatGPT.

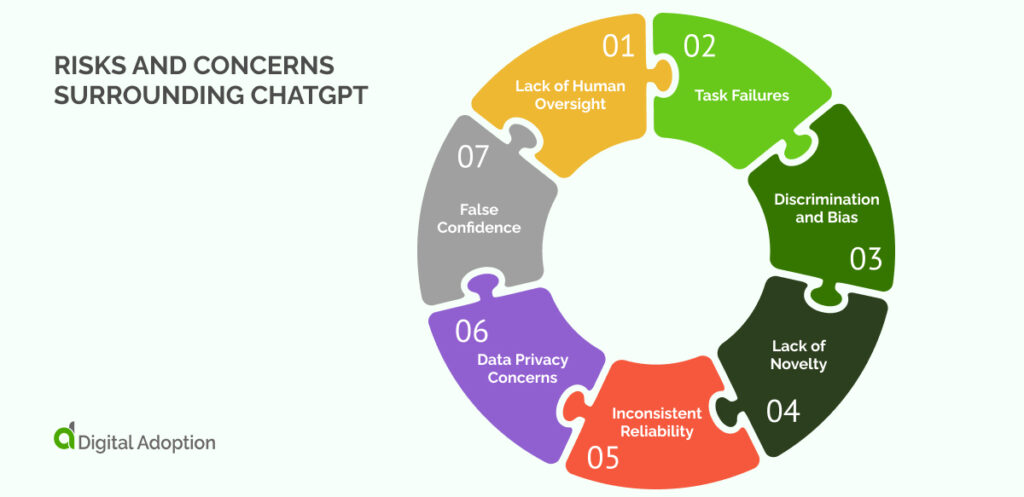

Lack of Human Oversight

Without adequate human oversight, critical decision-making by AI systems poses significant risks. The absence of human intervention and accountability can lead to detrimental consequences in high-stakes scenarios. Autonomous vehicles, healthcare systems, and other domains reliant on AI face the danger of unchecked autonomy.

Implementing mechanisms in AI governance frameworks such as auditing, accountability, and transparency can help ensure responsible deployment while striking a balance between AI capabilities and human judgment.

Task Failures

Unregulated AI systems, including ChatGPT, are not infallible and can fail to perform well in certain tasks or domains. While they demonstrate impressive capabilities, they have limitations that must be acknowledged. These limitations can result in misleading or unsatisfactory outcomes for users.

Discrimination and Bias

Unregulated AI, including ChatGPT, carries the inherent risk of perpetuating biases and discrimination. AI models learn from vast amounts of data—which can reflect unfortunate societal biases. When left unchecked, these biases can manifest in AI-generated content, leading to discriminatory outcomes and the exclusion of marginalized groups.

Lack of Novelty

Unregulated AI systems often struggle to provide unique and up-to-date information. They may generate redundant or stale responses, limiting their usefulness and value to users.

Without proper regulation and updates, AI models like ChatGPT can fail to keep pace with rapidly evolving information sources and user expectations. Encouraging continuous research and development to enhance AI’s ability to produce novel and timely responses is crucial in avoiding monotony and ensuring the relevance of AI systems.

Inconsistent Reliability

Unregulated AI systems often exhibit inconsistent reliability, making it challenging for users to predict their performance. While they may excel in some interactions, they can fall short in others.

This unpredictability hampers user trust and satisfaction. It is crucial to establish standards and regulations that ensure consistent and reliable performance across different scenarios, providing users with a more trustworthy and dependable AI experience.

Data Privacy Concerns

Unregulated AI raises significant concerns regarding data privacy. Users interact with AI systems like ChatGPT, often sharing personal or sensitive information.

Without proper regulations and safeguards, there is a risk of mishandling or unauthorized access to user data, leading to privacy breaches and potential harm to individuals. Stricter data protection measures, transparency about data handling practices, and adherence to privacy regulations are essential to establish trust and ensure the security of user information within AI frameworks.

False Confidence

Unregulated AI systems like ChatGPT are prone to generating false information and exhibiting unwarranted confidence. This poses significant risks, as users may unknowingly receive inaccurate or misleading responses. This overconfidence can lead to the spread of misinformation, potentially causing harm and eroding trust in AI systems.

What ChatGPT Governance Frameworks Should Businesses Be Aware Of?

Before creating your unique governance framework, it’s worthwhile to consider some notable AI governance frameworks that have already been established.

These frameworks serve as guiding principles, ensuring that ethical standards and legal requirements are upheld.

By adhering to these guidelines, organizations can effectively safeguard AI tools like ChatGPT, providing a secure and responsible environment for their inevitable use.

Singapore’s Model AI Governance Framework

Singapore’s thriving tech ecosystem has propelled it to the forefront of global innovation. As the country solidifies its position as a tech hub, consumer confidence in emerging technologies, including AI, is soaring.

Published by the Personal Data Protection Commission (PDPC), Singapore’s model for AI governance aims to provide guidance for responsible AI development and deployment. It has been well-received and serves as a step forward in promoting ethical and accountable AI practices globally.

The framework aims to address various aspects of AI governance, including transparency, explainability, safety, security, fairness, and data management. It is designed to guide both private and public sector organizations in Singapore in their ethical and accountable use of AI.

These principles can help inform other organizations in deploying AI responsibly and ethically, fostering trust and accountability in the technology.

NIST’s AI Risk Management Framework

In 2023, the National Institute of Standards and Technology (NIST) introduced its AI Risk Management Framework. This framework serves as a comprehensive guide for organizations to manage risks associated with AI systems.

The adaptability of this framework is a key feature, making it suitable for various use cases and organization types. It underlines the importance of trustworthiness in AI technologies, acknowledging that public trust is a vital component for these systems to reach their full potential.

Additionally, the framework was developed through a consensus-driven, open, transparent, and collaborative process, making it a product of collective intelligence from both private and public sectors.

KWM AI Governance Framework

King & Wood Mallesons’ AI Governance Framework is designed to implement AI principles. It provides a set of policies and procedures that ensure AI technologies are used responsibly.

The framework provides valuable guidance for organizations navigating the legal and ethical challenges associated with AI usage. It focuses on critical aspects such as transparency, accountability, and data privacy, ensuring responsible and ethical AI deployment.

Applying this framework to AI models like ChatGPT enables organizations to align their AI systems with the highest ethical standards. This approach fosters trust and promotes the responsible use of AI technology.

American Action Forum’s (AAF) AI Governance Framework

The American Action Forum’s AI Governance Framework addresses the growing need for guidelines governing AI systems.

The AAF’s AI Governance Framework is a significant development in the governance landscape of artificial intelligence. Introduced with the intent to pre-emptively address potential harms from AI, the framework lays down principles for the development, use, and post-deployment monitoring of AI systems.

It aims to navigate the increasingly complex landscape of AI, stressing the need for transparency, security, and accountability. The objective of this framework is clear: to guide the ethical and beneficial development of AI in a manner that serves all sections of society, striking a balance between innovation and regulation.

NYU Compliance & Enforcement’s AI Governance Framework

New York University’s AI Governance Framework focuses on determining which kinds of models, algorithms, big data systems, and AI applications will be covered.

It offers invaluable insights derived from utilizing advanced AI systems like ChatGPT, providing valuable lessons on responsibly managing and governing these cutting-edge technologies. The comprehensive framework also places a strong emphasis on the crucial aspects of compliance and enforcement in the ethical use of AI.

Addressing these key elements enables organizations to ensure the responsible and effective deployment of AI, which drives positive and impactful outcomes.

What Laws Inform AI Governance?

Navigating the legal landscape for generative AI technologies like ChatGPT involves understanding key regulations such as the Federal Rules of Civil Procedure, the proposed EU AI Act, existing European laws, and various global privacy and fairness laws.

However, the overlap of applicable AI laws may complicate compliance, given the wide-ranging and still unrealized capabilities of tools like ChatGPT. AI regulations vary across regulatory bodies worldwide, and given AI’s recent entrance into the social zeitgeist, further clarification and enforcement are imperative.

Let’s take a closer look at a few AI regulations:

Federal Rules of Civil Procedure: Primarily, these rules guide the conduct of all civil lawsuits in United States federal courts. They may impact AI like ChatGPT if, for example, a lawsuit arises due to the misuse of the technology or a dispute over its deployment. The rules govern areas such as personal jurisdiction, which could influence where a lawsuit can be filed against the developers or users of ChatGPT. They provide guidance on issues like discovery, which could affect the information that parties must share during a legal dispute.

As of now, the United States does not have comprehensive AI governance laws in place. However, there are ongoing discussions and initiatives at both federal and state levels to regulate AI technology. The Office of Science and Technology Policy (OSTP) has published a Blueprint for an AI Bill of Rights, which outlines principles and practices to safeguard individual rights in the context of AI.

EU AI Act: This regulation, proposed by the European Commission, aims to create a legal framework for “high-risk” AI systems, ensuring that they are safe and respect existing laws on fundamental rights and values. It outlines obligations for AI system providers, users, and third-party suppliers.

To minimize discrimination, the Act covers transparency, accountability, user redress, and high-quality datasets. If passed, it could significantly impact the development, deployment, and use of AI systems like ChatGPT within the European Union.

Existing European Laws: Regulations like the General Data Protection Regulation (GDPR) require a lawful basis, such as user consent, for processing the personal data of people in the EEA, and give individuals mechanisms to control their personal data.

However, the enforcement of GDPR for AI governance in Europe faces challenges due to varying parameters and nuances across countries. Regulatory bodies consider factors like user type, geo-location, data collected, and purpose for collection. This can result in different applications of legal procedures for AI regulation, making consistent enforcement difficult.

Until specific legislation is enacted for cutting-edge AI technology like ChatGPT, European countries will likely apply their existing legal frameworks.

Intellectual property laws might also be relevant, especially when AI creates original content. Moreover, consumer protection laws could be used to safeguard users from potential harm or misleading practices associated with the use of AI.

Nonetheless, striking a balance between privacy protection and innovation remains an ongoing challenge.

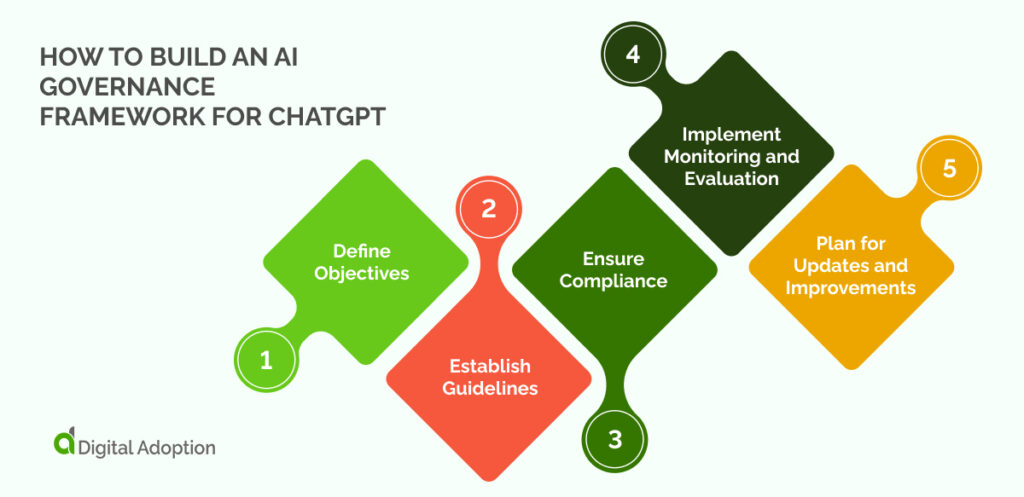

How To Build An AI Governance Framework for ChatGPT

Define Objectives

For those implementing ChatGPT or other AI-driven applications into existing business operations, setting clear, specific, and measurable objectives that align with your broader business strategy is key.

For instance, if your goal is to improve customer service, one objective could be to reduce response time to customer inquiries by 20% within the first three months. This objective will help you track and measure the effectiveness of ChatGPT in achieving your goal.

On the other hand, if you aim to drive engagement, you might set an objective to achieve a 15% increase in user interaction within six months of implementing ChatGPT. By defining these objectives, you can better evaluate the impact and success of ChatGPT in meeting your desired outcomes.
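Objectives like these are easy to check once they are written as a percent change from a baseline. The snippet below is a rough sketch of that arithmetic: the `Objective` class and the baseline figures (a 10-minute average response time, 2,000 weekly interactions) are hypothetical values chosen for illustration, not real data.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """A measurable target expressed as a percent change from a baseline."""
    name: str
    baseline: float
    target_change_pct: float  # negative = reduction, positive = increase

    def change_pct(self, current: float) -> float:
        """Percent change of the current value relative to the baseline."""
        return (current - self.baseline) / self.baseline * 100

    def is_met(self, current: float) -> bool:
        """True when the change reaches the target, in the target's direction."""
        change = self.change_pct(current)
        if self.target_change_pct < 0:
            return change <= self.target_change_pct
        return change >= self.target_change_pct


# Hypothetical baselines, for illustration only.
response_time = Objective("avg response time (min)", baseline=10.0, target_change_pct=-20.0)
engagement = Objective("weekly user interactions", baseline=2000.0, target_change_pct=15.0)

print(response_time.is_met(7.5))  # True: 10 -> 7.5 minutes is a 25% reduction
print(engagement.is_met(2200.0))  # False: 2,000 -> 2,200 is only a 10% increase
```

Recording the baseline before ChatGPT goes live matters more than the code itself: without it, there is nothing to measure the 20% or 15% against.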

Establish Guidelines

Guidelines are like the comprehensive rulebook for how ChatGPT engages with users.

They establish the tone of voice that aligns with your brand identity, define the appropriate language usage, and provide a framework for handling sensitive topics.

Following these guidelines will help maintain a uniform and smooth communication experience, enhancing the user journey. The goal is to foster an environment where users feel assisted, educated, and empowered during their interactions with ChatGPT.
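One way to keep guidelines consistent is to store them as structured data and turn them into instructions for the assistant. The sketch below assumes a hypothetical `AssistantGuidelines` class and made-up brand rules. It only builds a block of instruction text and does not call any ChatGPT or OpenAI API.

```python
from dataclasses import dataclass, field


@dataclass
class AssistantGuidelines:
    """Hypothetical container for brand voice and topic-handling rules."""
    tone: str
    banned_phrases: list[str] = field(default_factory=list)
    sensitive_topics: dict[str, str] = field(default_factory=dict)  # topic -> rule

    def to_instructions(self) -> str:
        """Render the guidelines as plain-text instructions, one rule per line."""
        lines = [f"Use a {self.tone} tone of voice."]
        if self.banned_phrases:
            lines.append("Never use these phrases: " + ", ".join(self.banned_phrases) + ".")
        for topic, rule in self.sensitive_topics.items():
            lines.append(f"If the user raises {topic}: {rule}")
        return "\n".join(lines)


# Illustrative values only; replace with your own brand rules.
guidelines = AssistantGuidelines(
    tone="friendly, professional",
    banned_phrases=["guaranteed results"],
    sensitive_topics={"medical questions": "Suggest consulting a qualified professional."},
)
print(guidelines.to_instructions())
```

Keeping the rules in one reviewed place, rather than scattered across prompts, makes it easier to audit what the assistant has been told to do.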

Ensure Compliance

Compliance with data protection and privacy laws is of utmost importance to ensure the safety and trust of users. When it comes to ChatGPT, it is crucial to collect and process only the user data that is strictly necessary, while implementing robust security measures to safeguard this data.

Failure to comply with these regulations exposes you to potential legal penalties and poses a significant risk to your brand’s reputation and customer trust, which can have long-lasting consequences. Therefore, prioritizing data protection and privacy is a legal obligation and a strategic imperative for your organization.
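Data minimization can start before a message ever reaches ChatGPT. The following is a simplified sketch that masks email addresses and phone numbers in user text. The `redact` function and both patterns are assumptions made for illustration: regular expressions like these miss many kinds of personal data and are not enough on their own to meet any privacy regulation.

```python
import re

# Illustrative patterns only; real personal data takes many more forms.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


message = "Contact jane.doe@example.com or +1 (555) 123-4567 about my order."
print(redact(message))
# Contact [EMAIL] or [PHONE] about my order.
```

A step like this works best as one layer among several, alongside access controls, retention limits, and a review of what the AI provider does with submitted data.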

Implement Monitoring and Evaluation

To gain a comprehensive understanding of the effectiveness of ChatGPT, it is crucial to establish a consistent practice of monitoring and evaluating its performance. This entails examining key metrics such as user satisfaction rates, engagement rates, and problem resolution times.

Examining these metrics allows us to gain crucial insights, highlighting both ChatGPT’s strengths and areas needing further refinement. This careful evaluation process helps us persistently seek improvement and provide the best possible user experience.
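As a rough illustration of how such metrics might be calculated, the sketch below summarizes a small batch of logged interactions. The `Interaction` record, the 1 to 5 satisfaction scale, and the "4 or higher counts as satisfied" cutoff are all assumptions. Engagement rate is left out because it needs data on total visitors, which this record does not hold.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    """Hypothetical log record for one ChatGPT-assisted conversation."""
    resolved: bool
    resolution_minutes: float
    satisfaction: int  # assumed 1-5 survey score


def summarize(interactions: list[Interaction]) -> dict[str, float]:
    """Compute satisfaction rate, resolution rate, and average resolution time."""
    if not interactions:
        raise ValueError("at least one interaction is required")
    resolved = [i for i in interactions if i.resolved]
    return {
        # Share of users who gave a score of 4 or 5 (assumed cutoff).
        "satisfaction_rate": sum(i.satisfaction >= 4 for i in interactions) / len(interactions),
        "resolution_rate": len(resolved) / len(interactions),
        # Average only over resolved conversations; NaN if none were resolved.
        "avg_resolution_minutes": (
            mean(i.resolution_minutes for i in resolved) if resolved else float("nan")
        ),
    }


# Made-up sample data, for illustration only.
logs = [
    Interaction(True, 4.0, 5),
    Interaction(True, 6.0, 4),
    Interaction(False, 0.0, 2),
    Interaction(True, 5.0, 3),
]
print(summarize(logs))
```

Running the same summary on a regular schedule, for example weekly, makes it possible to spot trends rather than react to individual conversations.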

Plan for Updates and Improvements

As technology advances and user needs evolve, it becomes increasingly crucial to have a well-defined plan for updating and improving ChatGPT. This plan should encompass various strategies, including actively seeking and incorporating user feedback, staying abreast of emerging technology trends, and adapting to changes in business needs.

Regular updates and improvements enable ChatGPT to stay pertinent and achieve its goals in a constantly evolving landscape. This cyclical process keeps ChatGPT at the cutting edge of AI-powered conversational assistance, ensuring top-tier user experiences while adapting to the changing needs of its users.

What’s Next For ChatGPT Governance?

The future of AI relies heavily on two things: the governance of ChatGPT, which ensures ethical AI usage, and data transformation, which is critical for refining system learning and response accuracy. Together, they pave the way towards a more dependable and user-friendly AI experience.

The emphasis will be on enhancing data privacy and trust, increasing transparency, and implementing ethical AI practices. A strong feedback system will be a key component, along with initiatives to ensure universal accessibility and inclusivity.

Regular evaluations and updates will keep pace with the rapidly changing AI environment, while robust dispute resolution mechanisms will address any arising issues.

All these strategies are geared towards providing a secure, dependable, and user-friendly AI experience.