Large language models (LLMs) like ChatGPT are here to stay, so why not use the wide variety of applications they offer?

One application of LLMs is transfer learning, which reuses what a model has learned on one natural language processing (NLP) task to improve its performance on another.

The global market value of LLMs is likely to grow to $63 billion by 2030, so now is a good time to put this technology to work and build digital resilience as part of your AI digital adoption.

In this blog, we delve into the essential steps for implementing transfer learning from large language models and explore the following:

- What is transfer learning from large language models?

- Advantages of transfer learning from LLMs.

- Use cases for transfer learning from large language models.

What is transfer learning from large language models?

To understand transfer learning from large language models, we first need to break it down into two components, transfer learning and large language models, and then combine them.

Transfer learning

Transfer learning is a machine learning technique that enables the reuse of knowledge gained from one task to improve performance on another task using related task-specific data.
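To make the idea concrete, here is a minimal sketch of transfer learning in plain Python. It assumes a tiny, hypothetical table of "pre-trained" word vectors (`PRETRAINED_VECTORS`) standing in for knowledge learned on a large source task. The vectors stay frozen, and only a small classifier is trained on a handful of target examples. All names and values are illustrative, not taken from any real model.

```python
import math

# Hypothetical "pre-trained" word vectors standing in for knowledge learned on
# a large source task. In practice these would come from a pre-trained model.
PRETRAINED_VECTORS = {
    "great": (1.0, 0.0),
    "love": (0.9, 0.1),
    "helpful": (0.8, 0.0),
    "terrible": (0.0, 1.0),
    "hate": (0.1, 0.9),
    "slow": (0.0, 0.8),
}

def embed(text):
    """Frozen feature extractor: average the pre-trained vectors of known words."""
    vectors = [PRETRAINED_VECTORS[w] for w in text.lower().split() if w in PRETRAINED_VECTORS]
    if not vectors:
        return (0.0, 0.0)
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head; the embeddings never change."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            features = embed(text)
            pred = sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)
            error = pred - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

def predict(text, weights, bias):
    """Return the probability that the text is positive."""
    features = embed(text)
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

# Small task-specific dataset: 1 = positive, 0 = negative.
target_examples = [("great service", 1), ("terrible wait", 0), ("love it", 1), ("hate this", 0)]
weights, bias = train_head(target_examples)

# "helpful" and "slow" never appear in the target data; the frozen vectors carry that knowledge.
print(predict("helpful agent", weights, bias) > 0.5)
print(predict("slow response", weights, bias) < 0.5)
```

The classifier handles words it never saw during training because the reused vectors already place them near similar words, which is the core benefit transfer learning aims for.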

Large language models

A large language model (LLM) is an artificial intelligence (AI) model that uses deep learning techniques and massive data sets to understand, summarize, generate, and predict text.

Transfer learning from large language models

Transfer learning from large language models involves taking the skills and knowledge an LLM like ChatGPT or Google Bard has acquired from varied tasks and training data and applying them to a new domain or task, such as named entity recognition (NER).

Another way of thinking about it is that the model uses its understanding of language and context from one task to improve its performance on another, which can support your wider digital transformation efforts.

4 Steps for applying transfer learning from large language models (LLMs)

Implementing transfer learning from large language models requires a systematic approach involving the steps below.

1. Identify use cases

Before implementing transfer learning, it’s essential to identify specific use cases where it can be beneficial.

This process involves understanding the problems or tasks that can benefit from transfer learning, such as text classification, language generation, or sentiment analysis.

Determine specific areas within your contact center operations where transfer learning could enhance efficiency or customer experience.

This step could include one of the following:

- Personalized responses.

- Multilingual support.

- Automated responses.

- Knowledge base creation.

Clearly defining the use cases makes it easier to choose the appropriate pre-trained language model and fine-tune it for the specific application.

2. Data collection and annotation

Once you have identified use cases, the next step is to collect and annotate the relevant data. This step involves gathering a large body of text that is representative of the target domain or task.

Additionally, you must annotate the data with labels or tags that indicate the desired outputs for the model.

Proper data collection and annotation are crucial for training an effective language model through transfer learning.
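As a rough illustration of what annotated data might look like, the sketch below stores each example as a text and a label, filters out unusable records, and holds back part of the data for validation. The label set, the `AnnotatedExample` class, and the sample messages are all hypothetical.

```python
from dataclasses import dataclass

ALLOWED_LABELS = {"billing", "technical", "general"}  # hypothetical label set

@dataclass(frozen=True)
class AnnotatedExample:
    text: str
    label: str

def validate(examples):
    """Keep only examples with non-empty text and a known label."""
    return [ex for ex in examples if ex.text.strip() and ex.label in ALLOWED_LABELS]

def split(examples, validation_fraction=0.25):
    """Deterministic split: the last fraction of examples is held out for validation."""
    cutoff = len(examples) - max(1, int(len(examples) * validation_fraction))
    return examples[:cutoff], examples[cutoff:]

raw = [
    AnnotatedExample("I was charged twice this month", "billing"),
    AnnotatedExample("The app crashes on login", "technical"),
    AnnotatedExample("", "general"),                       # dropped: empty text
    AnnotatedExample("What are your opening hours?", "general"),
    AnnotatedExample("My invoice is missing", "billng"),   # dropped: misspelled label
    AnnotatedExample("I cannot reset my password", "technical"),
]
clean = validate(raw)
train, validation = split(clean)
print(len(clean), len(train), len(validation))
```

Catching empty records and mistyped labels before training is cheap, and a held-out validation set gives you a way to check the fine-tuned model on data it has not seen.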

3. Model fine-tuning

Model fine-tuning involves taking a pre-trained language model, such as GPT-3 or BERT, and adapting it to the specific use case or domain.

This process typically involves updating the model’s parameters using the annotated data collected earlier.

Fine-tuning the model allows it to learn the specific nuances and patterns relevant to the targeted application, resulting in improved performance and accuracy.
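The effect of starting from pre-trained parameters can be shown with a deliberately tiny model. In this sketch, a two-parameter linear model stands in for a language model, and the "pre-trained" values are made up to represent a related source task. The same small number of gradient steps is applied from the pre-trained starting point and from scratch.

```python
def mse(params, data):
    """Mean squared error of the linear model y = w * x + b on the data."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(params, data, steps=5, lr=0.05):
    """Run a few gradient-descent steps on mean squared error, starting from params."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical parameters "learned" on a large source task (roughly y = 2x).
pretrained = (2.0, 0.0)
scratch = (0.0, 0.0)

# Small annotated target dataset that follows a related rule (y = 2.2x + 0.5).
target_data = [(0.0, 0.5), (1.0, 2.7), (2.0, 4.9), (3.0, 7.1)]

tuned_from_pretrained = fine_tune(pretrained, target_data)
tuned_from_scratch = fine_tune(scratch, target_data)

# With the same training budget, the pre-trained start ends with the lower error.
print(mse(tuned_from_pretrained, target_data) < mse(tuned_from_scratch, target_data))
```

Real fine-tuning updates millions or billions of parameters rather than two, but the principle is the same: a starting point that is already close to the target task needs less data and fewer updates.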

4. Continuous improvement

Transfer learning from large language models is not a one-time process. Continuous improvement is crucial for maintaining the model’s effectiveness over time.

This action involves monitoring the model’s performance, identifying areas for improvement, and updating the fine-tuned model as new data becomes available.

Additionally, staying updated with the latest advancements in language models and transfer learning techniques is vital for ensuring continuous improvement in model performance.
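One simple way to monitor performance is to track accuracy over the most recent predictions and flag the model for retraining when it falls below a threshold. The sketch below assumes you can obtain the correct label for each prediction after the fact; the `AccuracyMonitor` class, window size, and threshold are illustrative choices.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag when it drops."""

    def __init__(self, window_size=100, threshold=0.8):
        self.results = deque(maxlen=window_size)  # old results fall off automatically
        self.threshold = threshold

    def record(self, predicted, actual):
        self.results.append(predicted == actual)

    def accuracy(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        """True only when the window is full and accuracy is below the threshold."""
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window_size=5, threshold=0.8)
observations = [
    ("billing", "billing"),
    ("technical", "technical"),
    ("general", "billing"),
    ("billing", "technical"),
    ("general", "general"),
]
for predicted, actual in observations:
    monitor.record(predicted, actual)

print(monitor.accuracy(), monitor.needs_retraining())
```

Waiting until the window is full avoids triggering retraining on the first few unlucky predictions.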

Organizations, especially contact centers, can unlock unprecedented possibilities for elevating customer interactions, optimizing workflows, and making data-driven decisions by implementing transfer learning from large language models.

Embracing the power of transfer learning allows businesses to gain a competitive edge in delivering exceptional customer experiences in the digital age.

Advantages of transfer learning

Transfer learning offers several advantages, including the following.

Higher efficiency

Transfer learning offers a more efficient approach to model training, reducing the need for extensive data and computational resources. Instead of starting from scratch, you can leverage a pre-trained model and fine-tune it to suit your requirements.

Enhanced generalization

Large language models (LLMs) gain a broad knowledge base during their pre-training phase. This understanding allows them to recognize diverse language patterns and apply them to various tasks, enhancing their adaptability.

Better limited data functionality

Transfer learning empowers models to perform well on tasks, even when limited task-specific data is available. This capability proves particularly advantageous in niche or specialized domains.

For instance, you can create a customer support chatbot by fine-tuning a pre-trained LLM that has already learned grammar rules and sentence structures. The model can then understand and generate responses with far less data than a model trained from scratch would need.

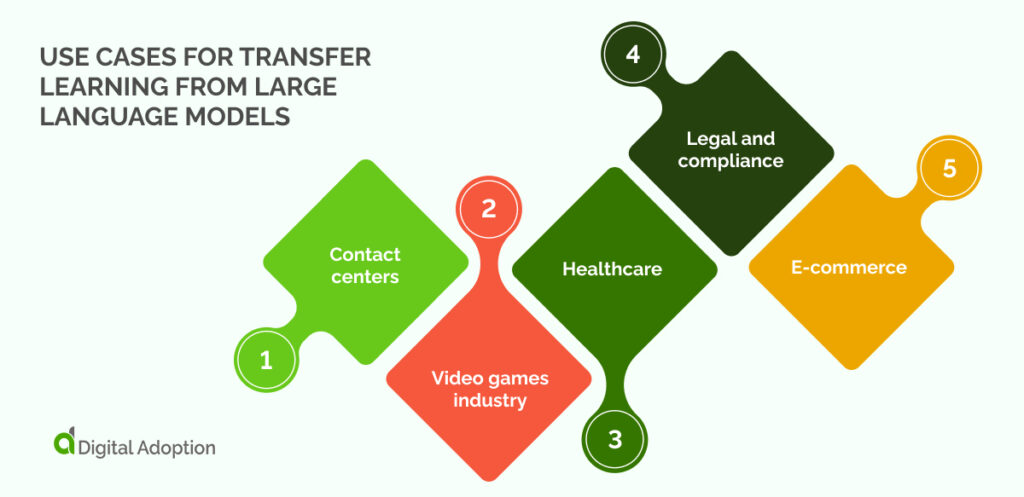

Use cases for transfer learning from large language models

Many use cases exist for using LLMs alongside transfer learning, both in contact centers and in video gaming, healthcare, legal, and e-commerce.

Contact centers

Leveraging large language models (LLMs) through transfer learning enhances the call center efficiency you need to compete against other enterprises.

In customer service, LLMs personalize responses and offer human-like interactions, which saves money without lowering the quality of those interactions and keeps customers satisfied.

Transfer learning facilitates multilingual support, ensuring seamless communication for customers from nearly any country.

It also optimizes workflows by automating routine responses with AI-powered chatbots. LLMs can contribute to knowledge base creation as well, providing consistent information.

Finally, LLMs can support predictive analytics for informed decision-making in contact centers.

Video games industry

In gaming, approaches developed for one game can be adapted to others. For example, the approach behind DeepMind’s AlphaGo, which was built for the board game Go, was later generalized to chess in AlphaZero. Transferring knowledge between gaming models reduces the time and cost of creating new models for different games, which helps studios keep their products stimulating for users.

Another example is MadRTS, a real-time strategy game for military simulations. It employs CARL (case-based reinforcement learning), bridging case-based reasoning and reinforcement learning to achieve tasks.

This efficiency strategy frees up time for developers to focus on higher-level tasks essential to gamer engagement.

Case-based reasoning tackles unseen but related problems using past experiences, while reinforcement learning approximates different situations based on the agent’s experience.

In this scenario, the two modules utilize transfer learning to elevate the overall gaming experience for MadRTS players.

Healthcare

There are many applications for transfer learning in the healthcare sector.

One example involves electromyographic (EMG) signals, which measure muscle response and share similarities with electroencephalographic (EEG) brainwave signals. Because of these similarities, both EMG and EEG signals can leverage transfer learning for tasks like gesture recognition.

In addition, transfer learning proves beneficial in medical imaging. For instance, models trained on MRI scans can effectively detect brain tumors from images of the brain.

Transfer learning can also make it practical to apply machine learning models to small datasets, which are usually too limited for training from scratch. This can benefit healthcare research into rare diseases or minority groups, irrespective of data type.

Legal and compliance

Automated document analysis and legal research can make legal language and context easier to understand, helping law firms support their clients’ cases.

Academic studies from 2023 suggest that transfer learning helps with accurate, low-resource legal case summarization, saving resources and time while maintaining the accuracy essential to legal firms’ reputation and sustainability. This use case allows judges and lawyers to focus on higher-level tasks.

The task at hand involves constructing an automatic summarization system for legal reports to enhance human productivity and address the limited availability of legal corpora.

Text summarization has two main approaches: extractive, selecting key sentences, and abstractive, summarizing by rephrasing and introducing new words.
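The extractive approach can be sketched in a few lines of Python. The example below scores each sentence by how often its words appear across the whole text and keeps the top-scoring sentences in their original order. It is a simple word-frequency heuristic with a made-up stopword list and sample report, not a transfer learning system, and it only illustrates what "selecting key sentences" means.

```python
import re
from collections import Counter

# Small illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "was", "that", "for", "on", "it"}

def content_words(text):
    """Lowercase the text and return its words, excluding stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def summarize(text, max_sentences=1):
    """Extractive summary: return the highest-scoring sentences in original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequency = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        return sum(frequency[w] for w in tokens) / max(len(tokens), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:max_sentences])  # restore document order
    return " ".join(sentences[i] for i in chosen)

report = (
    "The court reviewed the contract dispute. "
    "The contract required payment within thirty days. "
    "The weather was pleasant that morning."
)
print(summarize(report))
```

An abstractive system would instead generate new sentences, which is where a fine-tuned language model typically comes in.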

Transfer learning also has applications in enterprises: HR staff can use it to summarize legal compliance reports so that executives can understand and act on them more easily.

E-commerce

In e-commerce, monitoring and analyzing customer behavior is essential for driving sales. Transfer learning enables organizations to focus on subjective customer experiences through sentiment analysis as part of a customer experience transformation.

Doing so includes understanding likes, dislikes, interests, views, and preferences for specific products or services. It empowers businesses to delve deeper into feedback and reviews, gaining insights into users’ emotions.

Presently, e-commerce companies employ automated tools for sentiment classification, which label user opinions as positive, negative, or neutral.
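The three-way labeling can be pictured with a toy classifier. In the sketch below, a small hand-written word list (`LEXICON`) stands in for the scores a fine-tuned sentiment model would produce, and a margin around zero decides when a review counts as neutral. The words, scores, and margin are assumptions chosen for illustration.

```python
# Hypothetical word scores standing in for the output of a fine-tuned sentiment model.
LEXICON = {"love": 1.0, "great": 1.0, "fast": 0.5, "slow": -0.5, "broken": -1.0, "hate": -1.0}

def classify_sentiment(review, margin=0.25):
    """Label a review positive, negative, or neutral from its average word score."""
    words = [w.strip(".,!?") for w in review.lower().split()]
    scores = [LEXICON[w] for w in words if w in LEXICON]
    average = sum(scores) / len(scores) if scores else 0.0
    if average > margin:
        return "positive"
    if average < -margin:
        return "negative"
    return "neutral"

reviews = [
    "Love it, great quality!",
    "Arrived broken.",
    "Fast shipping but slow support.",
    "It is a phone.",
]
for review in reviews:
    print(review, "->", classify_sentiment(review))
```

A word list like this misses sarcasm, negation, and context, which is why production tools usually rely on a pre-trained language model fine-tuned on labeled reviews.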

Enterprises can devise tailored strategies to enhance the shopping experience through this analysis.

Sentiment classification is widely used on social media, where businesses mine, process, and extract conversations and opinions to better grasp user sentiment.

Combine two technologies to unlock the power of both

Combining large language models with transfer learning has the potential to unlock new capabilities in natural language processing, making it one of the biggest strategic IT trends of 2024.

Organizations can harness the power of both approaches by leveraging the immense knowledge stored in pre-trained models and adapting them to specific tasks through transfer learning.

This synergy accelerates model training and enhances the understanding and generation of human language, revolutionizing various applications across industries to maintain business success and unlock further innovation.
