Few technologies have attracted as many users as quickly as generative AI.

The upside is the powerful capabilities it gives organizations for automation and customer success. The downside is the set of generative AI risks you must consider before adopting it.

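ChatGPT, OpenAI’s generative AI chatbot, launched in November 2022 and passed one million users within five days. By January 2023, it was estimated to have reached 100 million monthly active users, making it one of the most talked-about technology trends of the year.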

It is worth asking whether these early users, and the many who followed, considered the risks before making generative AI tools part of their daily workflow.

To help you understand the top ten generative AI risks and learn how to stay safe, we will explore the following topics:

- How significant are generative AI risks?

- What are the top 10 generative AI risks (with examples)?

- How do you stay safe from generative AI risks?

- What use cases show the risks of generative AI?

- How will generative AI impact the business world in the future?

- Stay ahead of generative AI risks with proactive measures

How significant are generative AI risks?

How significant generative AI risks are depends on several factors, chiefly how users interact with the tool, how much they know about it, and what data they input.

When establishing the significance of these risks in your organization, ask yourself these questions:

- How familiar is our organization with the usage terms of the generative AI tool we are considering?

- Do we enter any sensitive employee or customer data into generative AI tools?

- Have we been trained to use generative AI safely, and have we reviewed artificial intelligence statistics so we understand its capabilities?

- Do we have a policy that governs generative AI use and explains how to handle potential risks?

- What special considerations apply to our field, such as using ChatGPT in insurance or sales?

Your answers to these questions will show how significant the risks of generative AI are for your organization.

What are the top 10 generative AI risks (with examples)?


Below are ten major generative AI risks, each with an example of what it looks like in practice, so you can prepare for them before you encounter them.

1. Data and security leakage

Information you enter into ChatGPT may be stored and, unless you opt out, used to train future models. Sensitive third-party or internal company data entered this way could potentially resurface in responses to other users’ related queries.

Doing so poses the risk of data leakage and could violate an organization’s data retention policies.

Example

Suppose your team is helping a customer launch an upcoming product. Avoid sharing details about it with ChatGPT, including confidential specifications and marketing strategies. This precaution reduces the potential for data leakage and security breaches.
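One practical safeguard is to scrub prompts before they leave your network. The minimal Python sketch below, assuming a hypothetical list of confidential codenames and two simple regular expressions, replaces email addresses, phone numbers, and flagged terms with placeholder tags. Regex-based redaction misses plenty of sensitive data, so treat it as an illustration rather than a substitute for a proper data loss prevention (DLP) tool.

```python
import re

# Illustrative patterns only; production systems should rely on a vetted DLP tool.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

# Hypothetical internal codenames that must never leave the company.
CONFIDENTIAL_TERMS = ["Project Falcon"]


def redact(prompt: str) -> str:
    """Replace sensitive substrings with placeholder tags before a prompt is sent."""
    for label, pattern in REDACTION_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    for term in CONFIDENTIAL_TERMS:
        # Case-insensitive match so "project falcon" is caught as well.
        prompt = re.sub(re.escape(term), "[CONFIDENTIAL]", prompt, flags=re.IGNORECASE)
    return prompt


print(redact("Email jane.doe@example.com about Project Falcon, call +1 555 010 9999."))
# → Email [EMAIL] about [CONFIDENTIAL], call [PHONE].
```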

2. Intellectual property complexities

Determining who owns code or text generated by ChatGPT can be complex. Under its terms of service, the user who provides the input is responsible for the output.

However, complications may arise if the output includes legally protected material from other sources that were not handled in line with AI compliance practices.

Copyright problems may also emerge if generative AI is used to create written material derived from copyrighted property.

Example

Consider a scenario where a user asks ChatGPT to create marketing material, and the output includes copyrighted content from external sources.

If that content lacks proper attribution or permission, publishing it risks infringing the intellectual property rights of the original creators.

Such actions could result in legal consequences and damage the company’s reputation.
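A rough way to catch near-verbatim reuse before publishing is to compare word n-grams in the generated text against passages you know are protected. The sketch below assumes you already have such reference text; `overlap_ratio`, the five-word n-gram size, and the sample sentences are illustrative choices, not a legal test for infringement.

```python
def word_ngrams(text, n):
    """Set of lowercase word n-grams in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(generated, reference, n=5):
    """Fraction of the generated text's n-grams that also appear in the reference text."""
    generated_grams = word_ngrams(generated, n)
    if not generated_grams:
        return 0.0  # text shorter than n words has nothing to compare
    return len(generated_grams & word_ngrams(reference, n)) / len(generated_grams)


# Hypothetical protected passage and AI-generated draft.
reference = "our award winning formula keeps your kitchen spotless all week long"
draft = "try the award winning formula keeps your kitchen spotless all week long today"
print(round(overlap_ratio(draft, reference), 2))  # 0.67
```

A high ratio (the cutoff is a policy decision, not a fixed standard) signals that a person should check attribution or permissions. Paraphrased material will slip through, so this complements legal review rather than replacing it.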

3. Limitations on AI development

Some generative AI’s terms of service explicitly forbid developers from using it to build other AI systems.

Such restrictions could hinder future AI development initiatives, particularly if the company operates in that domain.

Example

Imagine a company specializing in voice recognition technology aiming to enhance its current system by integrating ChatGPT’s natural language processing capabilities.

If ChatGPT’s terms of service prohibit this kind of use, the company may be unable to build the enhancement as planned without breaching them.

4. Open-source license compliance

Generative AI tools may be trained on open-source code and can reproduce it in their output. If that code ends up in your products, the organization risks violating Open Source Software (OSS) licenses like the GPL, leading to legal complications.

Example

Suppose a company uses ChatGPT to generate code for a software product without knowing the origin of the GPT training data. If the generated code reproduces open-source code, the company risks violating the terms of the licenses associated with it.

This action may result in legal complexities, including accusations of license infringement and the potential for legal action from the open-source community.
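Before merging AI-generated code, some teams scan it for license text or copyright notices that suggest it was copied from an existing project. The sketch below uses a small, illustrative set of patterns for GPL-family license headers and copyright lines. The marker list and `find_license_markers` are assumptions for this example. A clean result proves little, because generated code can reproduce licensed code without its header, so dedicated software composition analysis tools are still worth using.

```python
import re

# Illustrative markers only; real license detection needs a dedicated scanner.
LICENSE_MARKERS = {
    "GPL family": re.compile(
        r"GNU (?:Affero |Lesser )?General Public License|SPDX-License-Identifier:\s*[AL]?GPL",
        re.IGNORECASE,
    ),
    "Copyright notice": re.compile(r"Copyright\s+(?:\(c\)\s*|©\s*)?\d{4}", re.IGNORECASE),
}


def find_license_markers(code):
    """Return the names of marker types found in a snippet, sorted alphabetically."""
    return sorted(name for name, pattern in LICENSE_MARKERS.items() if pattern.search(code))


# Hypothetical snippet returned by a code assistant.
generated = '''# Copyright (c) 2019 Example Author
# SPDX-License-Identifier: GPL-3.0-or-later
def parse(data):
    return data.split(",")
'''
print(find_license_markers(generated))  # ['Copyright notice', 'GPL family']
```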

5. Confidentiality and liability concerns

Revealing confidential customer or partner information may violate contracts and legal obligations.

If ChatGPT’s security is compromised, any confidential content shared with it could be exposed, damaging the organization’s reputation and creating legal liabilities.

Another risk arises when staff use ChatGPT without proper training or IT approval, a practice known as shadow IT or shadow AI. This makes it hard to monitor and regulate how the tool is used.

Example

Imagine a healthcare organization using ChatGPT for patient inquiries.

Sharing confidential patient details, like medical records, with ChatGPT may violate legal obligations and infringe on patient privacy rights under laws like HIPAA in the United States.
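To make shadow AI visible, IT teams can look for traffic to public generative AI services that have not been approved. The sketch below assumes a hypothetical proxy log format of `<timestamp> <user> <url>` and hypothetical host lists. Adapt both to your own environment and acceptable-use policy.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of AI services your IT team has approved.
APPROVED_AI_HOSTS = {"ai.internal.example.com"}
# Illustrative, incomplete list of public generative AI hosts to watch for.
KNOWN_AI_HOSTS = {"chat.openai.com", "chatgpt.com", "claude.ai", "gemini.google.com"}


def flag_shadow_ai(log_lines):
    """Yield (user, host) for requests to known AI services that are not approved.

    Assumes a hypothetical log format: '<timestamp> <user> <url>'.
    """
    for line in log_lines:
        _, user, url = line.split(maxsplit=2)
        host = urlparse(url).hostname
        if host in KNOWN_AI_HOSTS and host not in APPROVED_AI_HOSTS:
            yield user, host


logs = [
    "2024-03-01T09:00 alice https://chatgpt.com/c/abc123",
    "2024-03-01T09:05 bob https://ai.internal.example.com/chat",
]
print(list(flag_shadow_ai(logs)))  # [('alice', 'chatgpt.com')]
```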

6. Research bias

AI system biases can stem from various sources, including skewed training data, flawed algorithms, and unconscious human biases. These biases may yield discriminatory or unjust outcomes, harming users and undermining trust in AI solutions.

AI systems like ChatGPT may produce inaccurate information because of demographic, confirmation, and sampling biases.

Example

For instance, if historical business data carries gender bias, ChatGPT might inadvertently perpetuate these biases in responses.

This bias can distort research findings, such as an analysis of an enterprise’s recruitment and retention history, and lower the quality of the research.

In chatbot applications, the same biases may lead ChatGPT to give customers inaccurate information.
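One way to check whether an AI-assisted process repeats historical bias is to compare outcome rates across groups. The sketch below computes selection rates per group and the ratio of the lowest rate to the highest. A ratio below 0.8 fails the “four-fifths” rule of thumb used in US employment guidance. The sample data is invented for illustration, and passing this check does not prove a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratio(rates):
    """Lowest group rate divided by the highest; below 0.8 fails the four-fifths rule of thumb."""
    highest = max(rates.values())
    # If no group was selected at all, there is no disparity to measure.
    return min(rates.values()) / highest if highest else 1.0


# Invented example: outcomes of an AI-assisted CV screen, grouped by gender.
decisions = ([("women", True)] * 2 + [("women", False)] * 8
             + [("men", True)] * 5 + [("men", False)] * 5)
rates = selection_rates(decisions)
print(rates)                        # {'women': 0.2, 'men': 0.5}
print(adverse_impact_ratio(rates))  # 0.4, well below 0.8
```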

7. Malicious use by bad actors

Malicious actors can exploit generative AI’s capabilities to craft malware, create content for phishing attacks and scams, and carry out cyberattacks using data sourced from the dark web.

Example

For example, bad actors could pair ChatGPT with bots to mass-produce fake news articles and misleading content that deceive readers.

8. Crime-as-a-service (CaaS)

Generative AI could accelerate the creation of low-cost malware, making crime-as-a-service more convenient and profitable.

Example

For instance, cybercriminals might misuse ChatGPT to generate abundant spam messages, overwhelming email systems and disrupting communication networks.

9. Model vulnerability

Model security is crucial because AI models are vulnerable to attacks, including adversarial attacks.

Example

Attackers can craft manipulated inputs that cause a model to produce incorrect outputs, with potentially significant consequences. Designing and developing secure models is vital to resisting such threats.
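Adversarial attacks are easiest to see on a simple model. The toy sketch below uses a hypothetical linear classifier and applies a fast-gradient-sign-style perturbation. Because the gradient of a linear score with respect to the input is just the weight vector, nudging each feature by a small `epsilon` against the sign of its weight gives the largest drop in score for that size of change. Attacks on LLMs such as ChatGPT (for example, prompt injection) work differently, but the principle is the same: small, crafted input changes produce wrong outputs.

```python
# Hypothetical toy classifier: predicts "approve" when score(x) > 0.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.2


def score(x):
    """Linear score w·x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def sign(value):
    return (value > 0) - (value < 0)


def fgsm_perturb(x, epsilon):
    """Fast-gradient-sign-style attack on the linear score.

    The gradient of score() with respect to x is WEIGHTS, so moving each
    feature by epsilon against sign(weight) gives the largest drop in score
    for a per-feature change of at most epsilon.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


x = [0.5, 0.2, 0.1]
x_adv = fgsm_perturb(x, epsilon=0.1)
print(round(score(x), 2), round(score(x_adv), 2))  # 0.13 -0.03: the decision flips
```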

10. Explainability

AI systems sometimes obscure how they reach decisions, which makes them hard to understand. This lack of transparency breeds mistrust and undermines the technology’s credibility.

Example

Suppose you use a chatbot to streamline customer service, but you don’t know why ChatGPT steers some customers toward undesirable answers. Without that insight, it is difficult to work out how to change the way the tool guides customers.
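For contrast, here is what an explanation looks like for a simple, interpretable model. The sketch below scores a support ticket with a hypothetical linear model and reports each feature’s contribution (weight × value), so you can see why a ticket was escalated. The feature names and weights are invented. LLMs like ChatGPT do not expose their reasoning in this form, which is the transparency gap this risk describes.

```python
# Hypothetical interpretable model: escalate a support ticket when the score is above 0.
WEIGHTS = {"negative_sentiment": 1.2, "mentions_refund": 0.9, "is_repeat_contact": 0.6}
INTERCEPT = -1.0


def explain(ticket):
    """Return the score and each feature's contribution (weight * value), largest first."""
    contributions = {name: weight * ticket.get(name, 0.0) for name, weight in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


score, ranked = explain({"negative_sentiment": 0.5, "mentions_refund": 1.0})
print(f"score={score:.2f}, escalate={score > 0}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```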

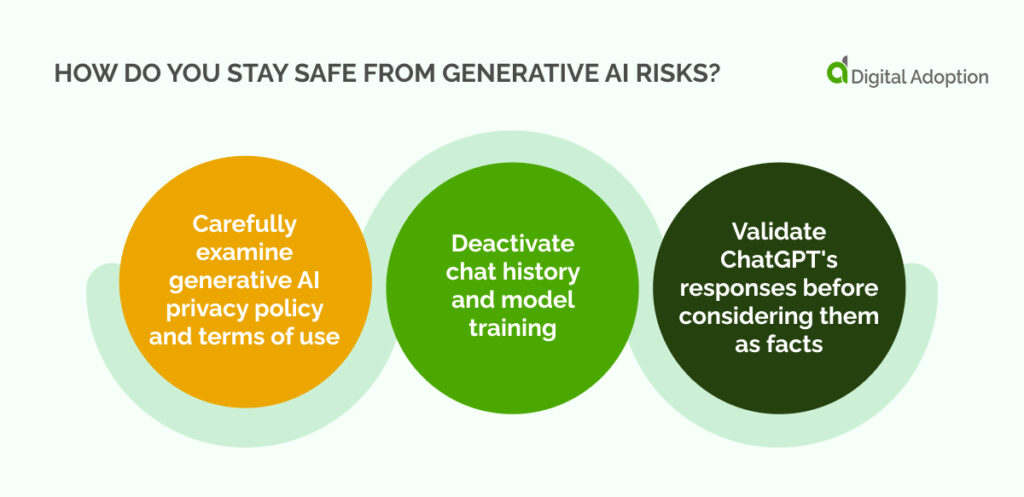

How do you stay safe from generative AI risks?

To use generative AI safely, follow these three strategies, starting with a regular review of each tool’s privacy policy and terms of use.

1. Carefully examine generative AI privacy policy and terms of use

Carefully review the privacy policy and terms of use of ChatGPT or any other generative AI tool before sharing personal information with it.

Bookmark these documents for regular reference, as they might change without prior notice.

Refer below for a list of privacy policies and terms of use for the most popular generative AI tools:

- Bard generative AI additional terms of service

- PaLM generative AI additional terms of service

- Claude terms and conditions

- ChatGPT privacy policy

2. Deactivate chat history and model training

Safeguard your ChatGPT conversations by turning off chat history and model training in your ChatGPT account.

Opt out of using your data for OpenAI’s model training by clicking the three dots at the bottom, going to Settings > Data controls, and switching off “Chat history & training.”

Even after you opt out, OpenAI stores your chats on its servers for 30 days, where staff can access them to monitor for abuse.

3. Validate ChatGPT’s responses before considering them as facts

ChatGPT can produce plausible-sounding but inaccurate information, commonly called “hallucination.”

If you plan to rely on ChatGPT’s answers for critical matters, verify its claims through thorough research against authoritative sources.
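Part of this verification can be triaged automatically. The sketch below extracts URLs from a response and flags any whose domain is not on a hypothetical list of trusted sources, so a person can check those first. It cannot confirm that a trusted page actually says what the model claims, and it ignores claims that have no citation at all, so manual review is still required.

```python
import re
from urllib.parse import urlparse

# Hypothetical list of domains your team treats as authoritative sources.
TRUSTED_DOMAINS = {"nist.gov", "who.int"}

URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")


def citations_to_review(response: str) -> list:
    """Return cited URLs whose host is not a trusted domain or one of its subdomains."""
    flagged = []
    for url in URL_PATTERN.findall(response):
        url = url.rstrip(".,;")  # drop sentence punctuation caught by the pattern
        host = urlparse(url).hostname or ""
        trusted = any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)
        if not trusted:
            flagged.append(url)
    return flagged


answer = "See https://www.nist.gov/ai and https://made-up-journal.example/paper123."
print(citations_to_review(answer))  # ['https://made-up-journal.example/paper123']
```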

What use cases show the risks of generative AI?

Examining use cases shows how generative AI risks play out in practice, which helps you counter them and stay safe.

Use case 1: Data scientists

Generative AI allows data scientists to produce and share synthetic data for training models without exposing personal information.

Risks for this use case

Generative AI introduces data poisoning, deobfuscation, and adversarial machine learning threats.

The main associated risk is that adversaries could reverse-engineer the synthetic data generation model and identify the source data it was trained on.
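One common check for this risk is to measure how close each synthetic record is to its nearest real record. A synthetic row that exactly or nearly duplicates a real one leaks that person’s data directly. The sketch below is a minimal version using Euclidean distance on small numeric tuples. The threshold and sample rows are hypothetical, and in practice you would scale features first and combine this with other privacy tests.

```python
import math


def distance_to_closest_record(synthetic, real):
    """For each synthetic row, the Euclidean distance to its nearest real row."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def flag_copied_rows(synthetic, real, threshold=1e-6):
    """Indices of synthetic rows that duplicate, or nearly duplicate, a real record."""
    distances = distance_to_closest_record(synthetic, real)
    return [i for i, d in enumerate(distances) if d <= threshold]


# Hypothetical (age, salary) rows; real pipelines would scale features first.
real = [(35, 52000.0), (41, 61000.0)]
synthetic = [(35, 52000.0), (38, 57000.0)]
print(flag_copied_rows(synthetic, real))  # [0]: the first synthetic row copies a real person
```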

Use case 2: IT

Programmers use large language models (LLMs), a form of generative AI, to find errors in code and automatically generate documentation.

Risks for this use case

This practice introduces data exfiltration, data leakage, and data integrity threats. The generated documentation can also reveal important system details that a company wouldn’t usually disclose.
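Before developers paste code into an LLM, a quick scan for credentials can catch the most damaging leaks. The sketch below checks a snippet against a few illustrative patterns: an AWS-style access key ID, a PEM private key header, and a hard-coded password assignment. The pattern list is an assumption, and dedicated secret scanners cover far more cases.

```python
import re

# Illustrative patterns; a dedicated secret scanner covers many more credential types.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "Private key block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "Hard-coded password": re.compile(r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE),
}


def find_secrets(code: str) -> list:
    """Return the names of secret types detected, in the order they are defined above."""
    return [name for name, pattern in SECRET_PATTERNS.items() if pattern.search(code)]


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
snippet = 'aws_key = "AKIAIOSFODNN7EXAMPLE"\npassword = "hunter2"\n'
print(find_secrets(snippet))  # ['AWS access key ID', 'Hard-coded password']
```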

Use case 3: Marketing

AI text generators allow marketers to produce rough copy drafts for campaigns instantaneously.

Risks for this use case

This introduces data leakage, data exfiltration, and competitive intelligence threats. Poor oversight and governance before release can also lead to public relations or client issues when the text is published.
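Governance before release can be as simple as a gate that blocks AI-generated copy until a person signs off. The sketch below assumes a hypothetical `Draft` record and a hypothetical list of blocked terms, such as unannounced product names. It returns the reasons a draft cannot be published yet.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """Hypothetical record for a piece of marketing copy awaiting release."""
    text: str
    ai_generated: bool
    approved_by: list = field(default_factory=list)


# Hypothetical terms that must not appear in public copy (e.g. unannounced product names).
BLOCKED_TERMS = ["Project Falcon"]


def release_blockers(draft: Draft) -> list:
    """Return reasons the draft cannot be published yet; an empty list means it may go out."""
    reasons = []
    if draft.ai_generated and not draft.approved_by:
        reasons.append("AI-generated copy needs human approval")
    lowered = draft.text.lower()
    for term in BLOCKED_TERMS:
        if term.lower() in lowered:
            reasons.append(f"contains blocked term: {term}")
    return reasons


print(release_blockers(Draft("Meet Project Falcon, launching soon!", ai_generated=True)))
```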

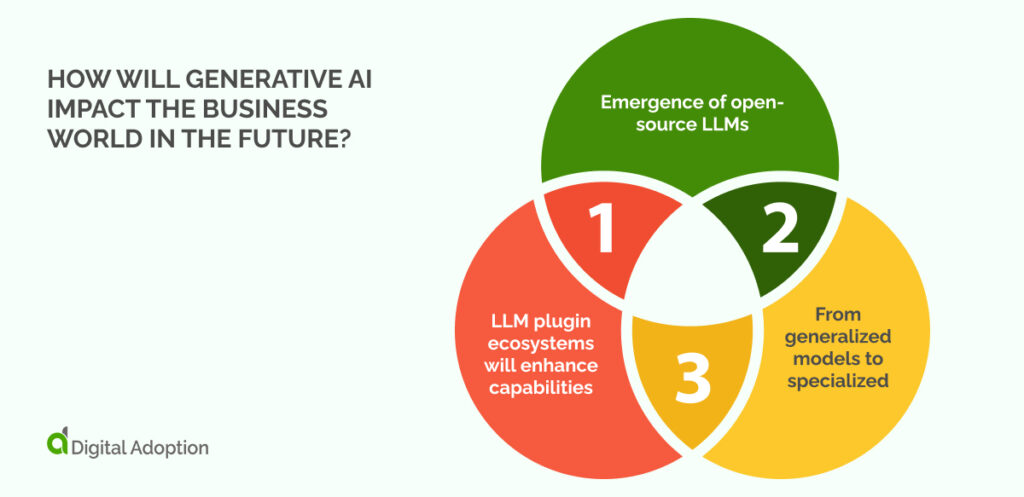

How will generative AI impact the business world in the future?

Generative AI is a relatively new technology, but its cutting-edge capabilities have made it popular, and those capabilities will evolve quickly.

To remain competitive, consider how generative AI will shape the business world in the coming years.

LLM plugin ecosystems will enhance capabilities

Generative AI providers, such as OpenAI, are introducing plugins to enhance the core capabilities of LLMs, tailoring them to specific tasks.

The plugin ecosystems facilitate AI integration into workflows, streamlining the deployment of AI-based solutions for diverse applications.

Emergence of open-source LLMs

Open-source LLMs are on the rise, offering alternatives to the initial proprietary models from OpenAI and others.

For CIOs, it means increased control over data and AI operations, but it necessitates heightened expertise in model management, maintenance, governance, and hardware infrastructure.

From generalized models to specialized

The initial surge of generative AI introduced versatile models proficient across various tasks but faced challenges in specific domains.

As generative AI focuses on specific industries, it will create purpose-specific models, enhancing proficiency in fields like banking, insurance, and HR by aligning with the unique language of these specialized areas.

Stay ahead of generative AI risks with proactive measures

Proactive measures are paramount to stay ahead of generative AI risks.

These measures include thoroughly reviewing privacy policies, deactivating chat history, and verifying responses for accuracy.

Embracing transparency, avoiding entering sensitive information, and understanding who owns model output all contribute to a robust defense.

Stay vigilant and navigate the evolving landscape with informed caution, and you will reduce the negative impact of generative AI risks while still reaping the technology’s benefits.